YugabyteDB - Row Level Geo Partitioning

Overview

This capability can simplify compliance of data privacy laws, data sovereignty laws, etc. It can make the overall architecture of the application very simple. Here is my stab at this feature. I followed the documentation for most part. I added only the cluster management parts to the whole guide for a quick self-drive.

Instead of working with actual cloud, I ran the whole setup on my machine. This will need about 2GB of free RAM. At its peak, there will be 3 master (100MB), 12 tserver(100MB) and 12 postgres (50MB) processes. I wanted to remove the network out of the picture to understand the data persistance portion of YugabyteDB, hence the local machine cluster.

Pre-requisites

You will require YugabyteDB binaries for your platform. On Macs with Apple Silicon, you should have rosetta

YugabyteDB binary (Mac/Linux-x86/ Linux-aarch64)

Mac

1curl -O https://downloads.yugabyte.com/releases/2.13.0.0/yugabyte-2.13.0.0-b42-darwin-x86_64.tar.gz 2tar xvfz yugabyte-2.13.0.0-b42-darwin-x86_64.tar.gz 3echo "export PATH=$PWD/yugabyte-2.13.0.0/bin:\$PATH" >> $HOME/.bashrc 4source $HOME/.bashrcOn Macs with Apple Silicon, you should have Rosetta installed.

Linux

1wget https://downloads.yugabyte.com/releases/2.13.0.0/yugabyte-2.13.0.0-b42-linux-x86_64.tar.gz 2tar xvfz yugabyte-2.13.0.0-b42-linux-x86_64.tar.gz 3echo "export PATH=$PWD/yugabyte-2.13.0.0/bin:\$PATH" >> $HOME/.bashrc 4source $HOME/.bashrc 5$PWD/yugabyte-2.13.0.0/bin/post_install.shAdditional 12 alias/IPs for loopback interface

Mac/Linux

This is an ephemeral setup and will get reset ones you reboot machine. You can re-run the same command to setup interfaces, in case of a reboot.

1for i in {1..12} 2do 3 sudo ifconfig lo0 alias 127.0.0.$i up 4doneCheck the aliases

1 ifconfig lo0Output

1 lo0: flags=8049<UP,LOOPBACK,RUNNING,MULTICAST> mtu 16384 2 options=1203<RXCSUM,TXCSUM,TXSTATUS,SW_TIMESTAMP> 3 inet 127.0.0.1 netmask 0xff000000 4 inet6 ::1 prefixlen 128 5 inet6 fe80::1%lo0 prefixlen 64 scopeid 0x1 6 inet 127.0.0.2 netmask 0xff000000 7 inet 127.0.0.3 netmask 0xff000000 8 inet 127.0.0.4 netmask 0xff000000 9 inet 127.0.0.5 netmask 0xff000000 10 inet 127.0.0.6 netmask 0xff000000 11 inet 127.0.0.7 netmask 0xff000000 12 inet 127.0.0.8 netmask 0xff000000 13 inet 127.0.0.9 netmask 0xff000000 14 inet 127.0.0.10 netmask 0xff000000 15 inet 127.0.0.11 netmask 0xff000000 16 inet 127.0.0.12 netmask 0xff000000 17 nd6 options=201<PERFORMNUD,DAD>

Setup Local Cluster

Clean up any existing cluster (in case you are repeating or followed another tutorial).

1yb-ctl destroy --data_dir $PWD/dataOutput

1Destroying cluster.Create a new cluster. This cluster will have replication factor of 3, and will have nodes spread in 3 regions. 1 node per region. We will use us-west-2, eu-central-1 and ap-south-1 regions of aws. And we will use the us-west-2a, eu-central-1a and ap-south-1a zones, from these regions.

1yb-ctl start --data_dir $PWD/data --rf 3 --placement_info "aws.us-west-2.us-west-2a,aws.eu-central-1.eu-central-1a,aws.ap-south-1.ap-south-1a"NOTE for Mac: You will get multiple (6) prompts/dialogs stating yb-tserver or yb-master to accept incoming connections. Allow all of them. This will happen later when we add more nodes. Repeat

Output

1Creating cluster. 2Waiting for cluster to be ready. 3---------------------------------------------------------------------------------------------------- 4| Node Count: 3 | Replication Factor: 3 | 5---------------------------------------------------------------------------------------------------- 6| JDBC : jdbc:postgresql://127.0.0.1:5433/yugabyte | 7| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh | 8| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh | 9| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli | 10| Web UI : http://127.0.0.1:7000/ | 11| Cluster Data : /tmp/demo/data | 12---------------------------------------------------------------------------------------------------- 13 14For more info, please use: yb-ctl --data_dir /tmp/demo/data status(Optional) Check the current cluster configuration

1yb-ctl status --data_dir $PWD/dataOutput

1---------------------------------------------------------------------------------------------------- 2| Node Count: 3 | Replication Factor: 3 | 3---------------------------------------------------------------------------------------------------- 4| JDBC : jdbc:postgresql://127.0.0.1:5433/yugabyte | 5| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh | 6| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh | 7| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli | 8| Web UI : http://127.0.0.1:7000/ | 9| Cluster Data : /tmp/demo/data | 10---------------------------------------------------------------------------------------------------- 11---------------------------------------------------------------------------------------------------- 12| Node 1: yb-tserver (pid 90648), yb-master (pid 90639) | 13---------------------------------------------------------------------------------------------------- 14| JDBC : jdbc:postgresql://127.0.0.1:5433/yugabyte | 15| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh | 16| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh | 17| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli | 18| data-dir[0] : /tmp/demo/data/node-1/disk-1/yb-data | 19| yb-tserver Logs : /tmp/demo/data/node-1/disk-1/yb-data/tserver/logs | 20| yb-master Logs : /tmp/demo/data/node-1/disk-1/yb-data/master/logs | 21---------------------------------------------------------------------------------------------------- 22---------------------------------------------------------------------------------------------------- 23| Node 2: yb-tserver (pid 90651), yb-master (pid 90642) | 24---------------------------------------------------------------------------------------------------- 25| JDBC : jdbc:postgresql://127.0.0.2:5433/yugabyte | 26| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.2 | 27| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.2 | 28| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.2 | 29| data-dir[0] : /tmp/demo/data/node-2/disk-1/yb-data | 30| yb-tserver Logs : /tmp/demo/data/node-2/disk-1/yb-data/tserver/logs | 31| yb-master Logs : /tmp/demo/data/node-2/disk-1/yb-data/master/logs | 32---------------------------------------------------------------------------------------------------- 33---------------------------------------------------------------------------------------------------- 34| Node 3: yb-tserver (pid 90654), yb-master (pid 90645) | 35---------------------------------------------------------------------------------------------------- 36| JDBC : jdbc:postgresql://127.0.0.3:5433/yugabyte | 37| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.3 | 38| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.3 | 39| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.3 | 40| data-dir[0] : /tmp/demo/data/node-3/disk-1/yb-data | 41| yb-tserver Logs : /tmp/demo/data/node-3/disk-1/yb-data/tserver/logs | 42| yb-master Logs : /tmp/demo/data/node-3/disk-1/yb-data/master/logs | 43----------------------------------------------------------------------------------------------------(Optional) Launch the Master UI in browser for looking at the state of cluster

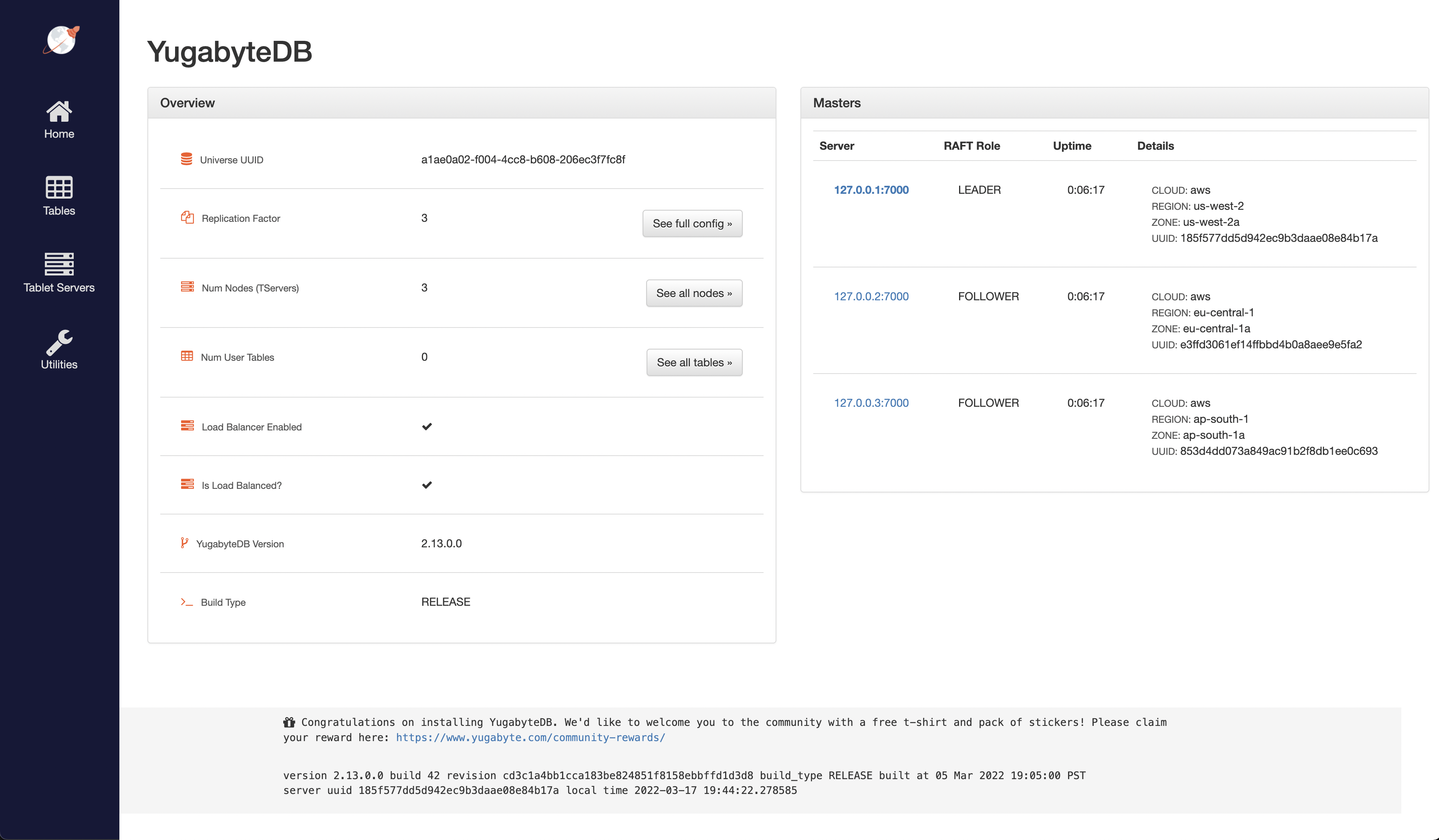

You can examine the cluster config JSON by clicking on Home > Overview > (See full config) or click here

Config

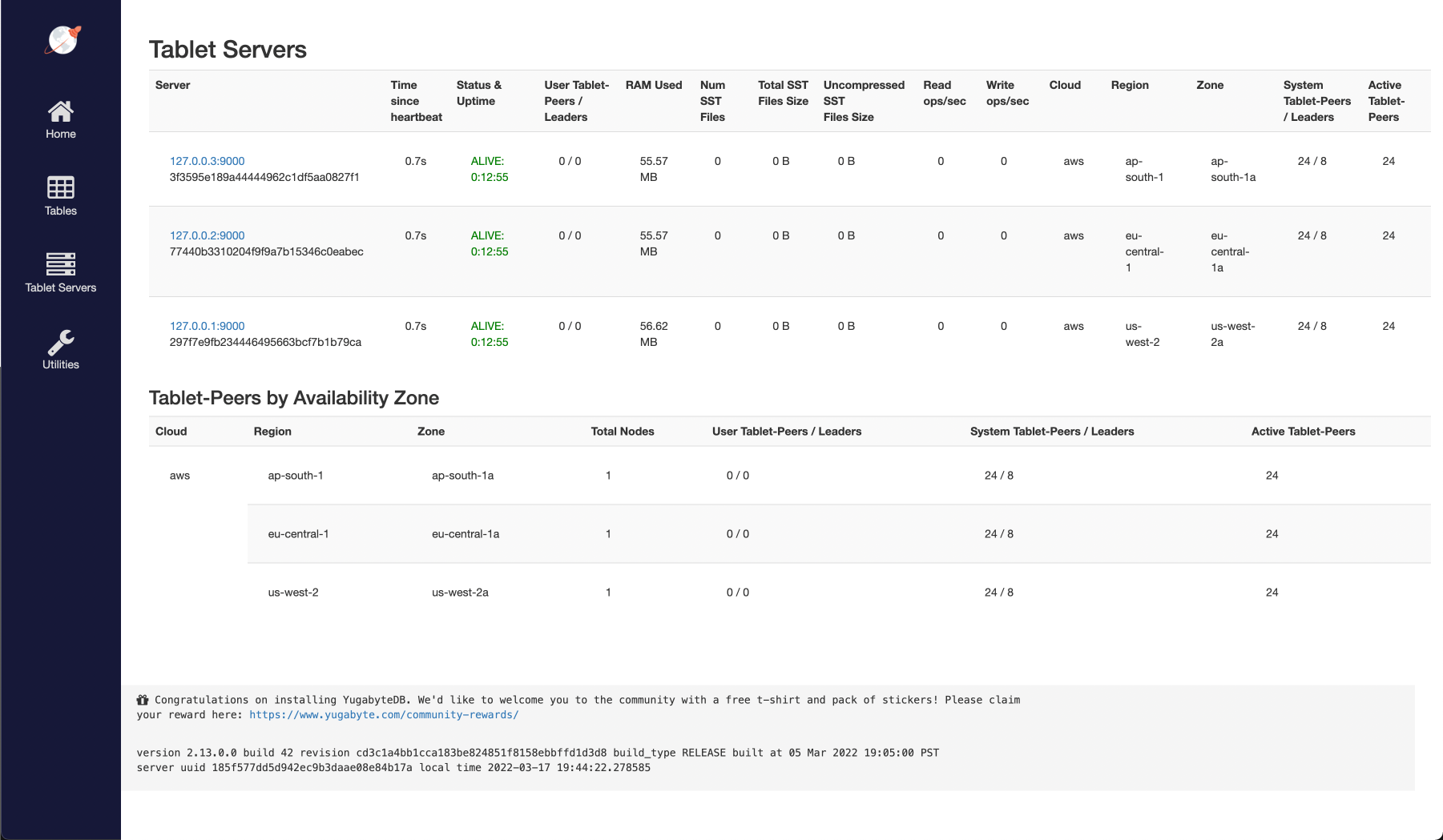

1version: 1 2replication_info { 3 live_replicas { 4 num_replicas: 3 5 placement_blocks { 6 cloud_info { 7 placement_cloud: "aws" 8 placement_region: "eu-central-1" 9 placement_zone: "eu-central-1a" 10 } 11 min_num_replicas: 1 12 } 13 placement_blocks { 14 cloud_info { 15 placement_cloud: "aws" 16 placement_region: "ap-south-1" 17 placement_zone: "ap-south-1a" 18 } 19 min_num_replicas: 1 20 } 21 placement_blocks { 22 cloud_info { 23 placement_cloud: "aws" 24 placement_region: "us-west-2" 25 placement_zone: "us-west-2a" 26 } 27 min_num_replicas: 1 28 } 29 } 30} 31cluster_uuid: "a1ae0a02-f004-4cc8-b608-206ec3f7fc8f"You can check TServer details by clicking Home > Overview > (See all nodes) or click here

Screenshot

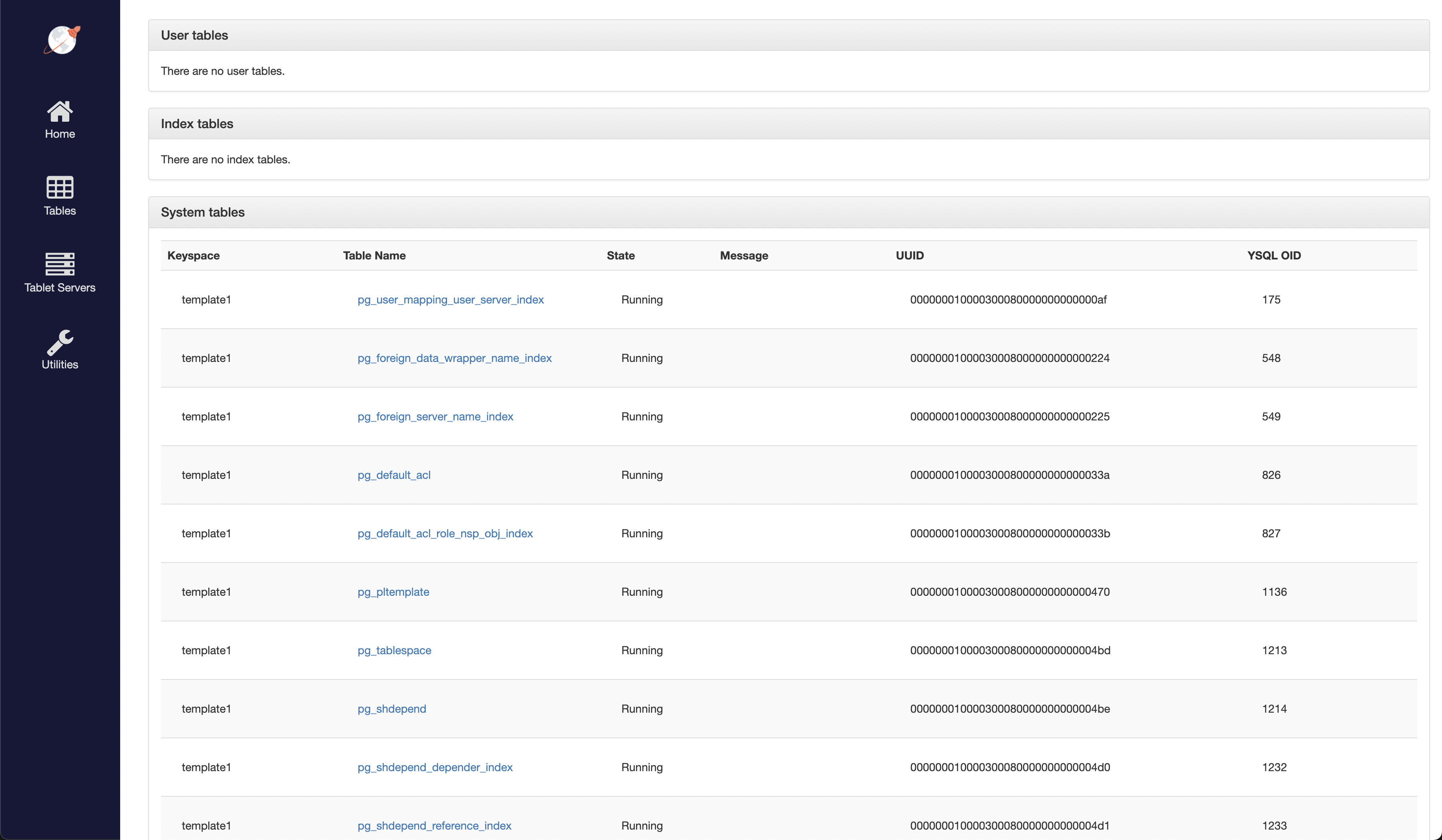

You can check tables by clicking Home > Overview > (See all tables) or click here

Screenshot

Back on command prompt, lets add 2 additional nodes for each of the 3 region. So, 6 additional nodes have to be added. This will make total node count to 9. We want to keep each node is a separate zone for intra-region resiliency.

1 2yb-ctl --data_dir $PWD/data add_node --placement_info "aws.us-west-2.us-west-2b" 3yb-ctl --data_dir $PWD/data add_node --placement_info "aws.us-west-2.us-west-2c" 4 5yb-ctl --data_dir $PWD/data add_node --placement_info "aws.eu-central-1.eu-central-1b" 6yb-ctl --data_dir $PWD/data add_node --placement_info "aws.eu-central-1.eu-central-1c" 7 8yb-ctl --data_dir $PWD/data add_node --placement_info "aws.ap-south-1.ap-south-1b" 9yb-ctl --data_dir $PWD/data add_node --placement_info "aws.ap-south-1.ap-south-1c"Output

1Adding node. 2Waiting for cluster to be ready. 3---------------------------------------------------------------------------------------------------- 4| Node 4: yb-tserver (pid 91265) | 5---------------------------------------------------------------------------------------------------- 6| JDBC : jdbc:postgresql://127.0.0.4:5433/yugabyte | 7| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.4 | 8| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.4 | 9| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.4 | 10| data-dir[0] : /tmp/demo/data/node-4/disk-1/yb-data | 11| yb-tserver Logs : /tmp/demo/data/node-4/disk-1/yb-data/tserver/logs | 12---------------------------------------------------------------------------------------------------- 13Adding node. 14Waiting for cluster to be ready. 15---------------------------------------------------------------------------------------------------- 16| Node 5: yb-tserver (pid 91323) | 17---------------------------------------------------------------------------------------------------- 18| JDBC : jdbc:postgresql://127.0.0.5:5433/yugabyte | 19| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.5 | 20| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.5 | 21| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.5 | 22| data-dir[0] : /tmp/demo/data/node-5/disk-1/yb-data | 23| yb-tserver Logs : /tmp/demo/data/node-5/disk-1/yb-data/tserver/logs | 24---------------------------------------------------------------------------------------------------- 25Adding node. 26Waiting for cluster to be ready. 27---------------------------------------------------------------------------------------------------- 28| Node 6: yb-tserver (pid 91385) | 29---------------------------------------------------------------------------------------------------- 30| JDBC : jdbc:postgresql://127.0.0.6:5433/yugabyte | 31| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.6 | 32| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.6 | 33| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.6 | 34| data-dir[0] : /tmp/demo/data/node-6/disk-1/yb-data | 35| yb-tserver Logs : /tmp/demo/data/node-6/disk-1/yb-data/tserver/logs | 36---------------------------------------------------------------------------------------------------- 37Adding node. 38Waiting for cluster to be ready. 39---------------------------------------------------------------------------------------------------- 40| Node 7: yb-tserver (pid 91449) | 41---------------------------------------------------------------------------------------------------- 42| JDBC : jdbc:postgresql://127.0.0.7:5433/yugabyte | 43| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.7 | 44| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.7 | 45| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.7 | 46| data-dir[0] : /tmp/demo/data/node-7/disk-1/yb-data | 47| yb-tserver Logs : /tmp/demo/data/node-7/disk-1/yb-data/tserver/logs | 48---------------------------------------------------------------------------------------------------- 49Adding node. 50Waiting for cluster to be ready. 51---------------------------------------------------------------------------------------------------- 52| Node 8: yb-tserver (pid 91495) | 53---------------------------------------------------------------------------------------------------- 54| JDBC : jdbc:postgresql://127.0.0.8:5433/yugabyte | 55| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.8 | 56| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.8 | 57| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.8 | 58| data-dir[0] : /tmp/demo/data/node-8/disk-1/yb-data | 59| yb-tserver Logs : /tmp/demo/data/node-8/disk-1/yb-data/tserver/logs | 60---------------------------------------------------------------------------------------------------- 61Adding node. 62Waiting for cluster to be ready. 63---------------------------------------------------------------------------------------------------- 64| Node 9: yb-tserver (pid 91549) | 65---------------------------------------------------------------------------------------------------- 66| JDBC : jdbc:postgresql://127.0.0.9:5433/yugabyte | 67| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.9 | 68| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.9 | 69| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.9 | 70| data-dir[0] : /tmp/demo/data/node-9/disk-1/yb-data | 71| yb-tserver Logs : /tmp/demo/data/node-9/disk-1/yb-data/tserver/logs | 72----------------------------------------------------------------------------------------------------(Optional) Check the current cluster configuration

1yb-ctl status --data_dir $PWD/dataOutput

1---------------------------------------------------------------------------------------------------- 2| Node Count: 9 | Replication Factor: 3 | 3---------------------------------------------------------------------------------------------------- 4| JDBC : jdbc:postgresql://127.0.0.1:5433/yugabyte | 5| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh | 6| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh | 7| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli | 8| Web UI : http://127.0.0.1:7000/ | 9| Cluster Data : /tmp/demo/data | 10---------------------------------------------------------------------------------------------------- 11---------------------------------------------------------------------------------------------------- 12| Node 1: yb-tserver (pid 90648), yb-master (pid 90639) | 13---------------------------------------------------------------------------------------------------- 14| JDBC : jdbc:postgresql://127.0.0.1:5433/yugabyte | 15| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh | 16| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh | 17| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli | 18| data-dir[0] : /tmp/demo/data/node-1/disk-1/yb-data | 19| yb-tserver Logs : /tmp/demo/data/node-1/disk-1/yb-data/tserver/logs | 20| yb-master Logs : /tmp/demo/data/node-1/disk-1/yb-data/master/logs | 21---------------------------------------------------------------------------------------------------- 22---------------------------------------------------------------------------------------------------- 23| Node 2: yb-tserver (pid 90651), yb-master (pid 90642) | 24---------------------------------------------------------------------------------------------------- 25| JDBC : jdbc:postgresql://127.0.0.2:5433/yugabyte | 26| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.2 | 27| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.2 | 28| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.2 | 29| data-dir[0] : /tmp/demo/data/node-2/disk-1/yb-data | 30| yb-tserver Logs : /tmp/demo/data/node-2/disk-1/yb-data/tserver/logs | 31| yb-master Logs : /tmp/demo/data/node-2/disk-1/yb-data/master/logs | 32---------------------------------------------------------------------------------------------------- 33---------------------------------------------------------------------------------------------------- 34| Node 3: yb-tserver (pid 90654), yb-master (pid 90645) | 35---------------------------------------------------------------------------------------------------- 36| JDBC : jdbc:postgresql://127.0.0.3:5433/yugabyte | 37| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.3 | 38| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.3 | 39| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.3 | 40| data-dir[0] : /tmp/demo/data/node-3/disk-1/yb-data | 41| yb-tserver Logs : /tmp/demo/data/node-3/disk-1/yb-data/tserver/logs | 42| yb-master Logs : /tmp/demo/data/node-3/disk-1/yb-data/master/logs | 43---------------------------------------------------------------------------------------------------- 44---------------------------------------------------------------------------------------------------- 45| Node 4: yb-tserver (pid 91265) | 46---------------------------------------------------------------------------------------------------- 47| JDBC : jdbc:postgresql://127.0.0.4:5433/yugabyte | 48| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.4 | 49| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.4 | 50| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.4 | 51| data-dir[0] : /tmp/demo/data/node-4/disk-1/yb-data | 52| yb-tserver Logs : /tmp/demo/data/node-4/disk-1/yb-data/tserver/logs | 53---------------------------------------------------------------------------------------------------- 54---------------------------------------------------------------------------------------------------- 55| Node 5: yb-tserver (pid 91323) | 56---------------------------------------------------------------------------------------------------- 57| JDBC : jdbc:postgresql://127.0.0.5:5433/yugabyte | 58| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.5 | 59| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.5 | 60| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.5 | 61| data-dir[0] : /tmp/demo/data/node-5/disk-1/yb-data | 62| yb-tserver Logs : /tmp/demo/data/node-5/disk-1/yb-data/tserver/logs | 63---------------------------------------------------------------------------------------------------- 64---------------------------------------------------------------------------------------------------- 65| Node 6: yb-tserver (pid 91385) | 66---------------------------------------------------------------------------------------------------- 67| JDBC : jdbc:postgresql://127.0.0.6:5433/yugabyte | 68| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.6 | 69| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.6 | 70| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.6 | 71| data-dir[0] : /tmp/demo/data/node-6/disk-1/yb-data | 72| yb-tserver Logs : /tmp/demo/data/node-6/disk-1/yb-data/tserver/logs | 73---------------------------------------------------------------------------------------------------- 74---------------------------------------------------------------------------------------------------- 75| Node 7: yb-tserver (pid 91449) | 76---------------------------------------------------------------------------------------------------- 77| JDBC : jdbc:postgresql://127.0.0.7:5433/yugabyte | 78| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.7 | 79| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.7 | 80| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.7 | 81| data-dir[0] : /tmp/demo/data/node-7/disk-1/yb-data | 82| yb-tserver Logs : /tmp/demo/data/node-7/disk-1/yb-data/tserver/logs | 83---------------------------------------------------------------------------------------------------- 84---------------------------------------------------------------------------------------------------- 85| Node 8: yb-tserver (pid 91495) | 86---------------------------------------------------------------------------------------------------- 87| JDBC : jdbc:postgresql://127.0.0.8:5433/yugabyte | 88| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.8 | 89| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.8 | 90| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.8 | 91| data-dir[0] : /tmp/demo/data/node-8/disk-1/yb-data | 92| yb-tserver Logs : /tmp/demo/data/node-8/disk-1/yb-data/tserver/logs | 93---------------------------------------------------------------------------------------------------- 94---------------------------------------------------------------------------------------------------- 95| Node 9: yb-tserver (pid 91549) | 96---------------------------------------------------------------------------------------------------- 97| JDBC : jdbc:postgresql://127.0.0.9:5433/yugabyte | 98| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.9 | 99| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.9 | 100| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.9 | 101| data-dir[0] : /tmp/demo/data/node-9/disk-1/yb-data | 102| yb-tserver Logs : /tmp/demo/data/node-9/disk-1/yb-data/tserver/logs | 103----------------------------------------------------------------------------------------------------(Optional) Check the TServers details by clicking Home > Overview > (See all nodes) or click here

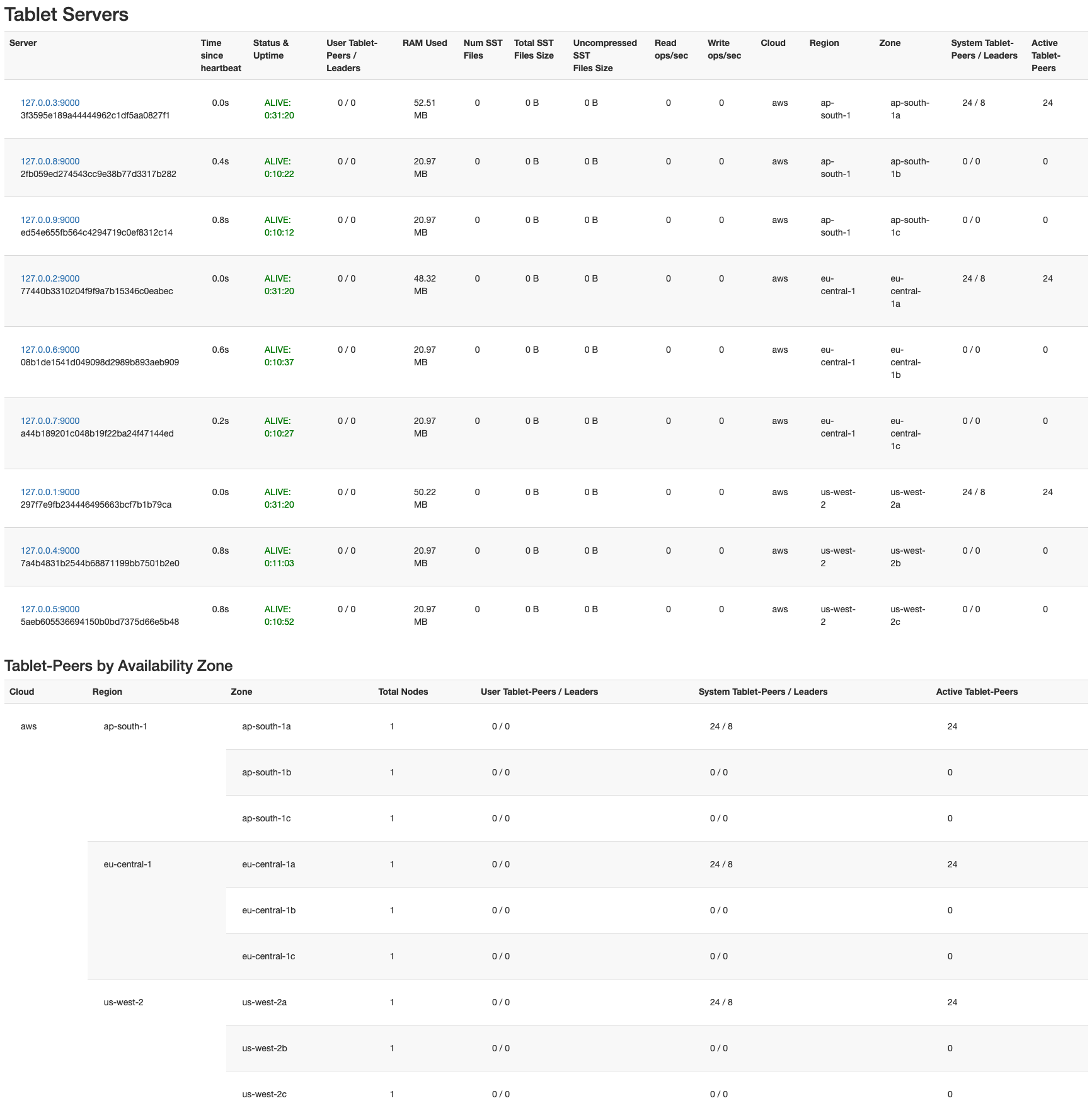

Screenshot

Now, we have created a multi-region cluster across 3 regions and 9 zones. Each zone has 1 node in it. Table below shows Region/Zone wise list of nodes with roles.

| Region | Zone | IP | Role |

|---|---|---|---|

| ap-south-1 | ap-south-1a | 127.0.0.3 | master,tserver |

| ap-south-1 | ap-south-1b | 127.0.0.8 | tserver |

| ap-south-1 | ap-south-1c | 127.0.0.9 | tserver |

| us-west-2 | us-west-2a | 127.0.0.1 | master,tserver |

| us-west-2 | us-west-2b | 127.0.0.4 | tserver |

| us-west-2 | us-west-2c | 127.0.0.5 | tserver |

| eu-central-1 | eu-central-1a | 127.0.0.2 | master,tserver |

| eu-central-1 | eu-central-1b | 127.0.0.6 | tserver |

| eu-central-1 | eu-central-1c | 127.0.0.7 | tserver |

Global Transactions

In this exercise, we will create a single bank_transactions table. Out aim is to place the transaction records for each geo in its own nodes. This is simulating the enforcement of data privacy/sovereignty rules with in a single database. We will use SQL queries to simulate application interactions.

Lets launch YSQL Shell to perform all the SQL query operations.

1ysqlsh

Output

1ysqlsh (11.2-YB-2.13.0.0-b0)

2Type "help" for help.

3

4yugabyte=#

You may exit YSQL Shell anytime by typing \q<enter>, <ctrl>+d or quit;<enter>.

Setup tablespace for each geo

Create geo-partitioned tablespace for

us-west-21CREATE TABLESPACE us_west_2_tablespace WITH ( 2 replica_placement='{"num_replicas": 3, "placement_blocks": 3 [{"cloud":"aws","region":"us-west-2","zone":"us-west-2a","min_num_replicas":1}, 4 {"cloud":"aws","region":"us-west-2","zone":"us-west-2b","min_num_replicas":1}, 5 {"cloud":"aws","region":"us-west-2","zone":"us-west-2c","min_num_replicas":1}]}' 6);Output

1CREATE TABLESPACECreate geo-partitioned tablespace for

eu-central-11 2CREATE TABLESPACE eu_central_1_tablespace WITH ( 3 replica_placement='{"num_replicas": 3, "placement_blocks": 4 [{"cloud":"aws","region":"eu-central-1","zone":"eu-central-1a","min_num_replicas":1}, 5 {"cloud":"aws","region":"eu-central-1","zone":"eu-central-1b","min_num_replicas":1}, 6 {"cloud":"aws","region":"eu-central-1","zone":"eu-central-1c","min_num_replicas":1}]}' 7);Output

1CREATE TABLESPACECreate geo-partitioned tablespace for

ap-south-11CREATE TABLESPACE ap_south_1_tablespace WITH ( 2 replica_placement='{"num_replicas": 3, "placement_blocks": 3 [{"cloud":"aws","region":"ap-south-1","zone":"ap-south-1a","min_num_replicas":1}, 4 {"cloud":"aws","region":"ap-south-1","zone":"ap-south-1b","min_num_replicas":1}, 5 {"cloud":"aws","region":"ap-south-1","zone":"ap-south-1c","min_num_replicas":1}]}' 6);Output

1CREATE TABLESPACE(Optional) List all the tablespaces

1\dbOutput

1 List of tablespaces 2 Name | Owner | Location 3-------------------------+----------+---------- 4 ap_south_1_tablespace | yugabyte | 5 eu_central_1_tablespace | yugabyte | 6 pg_default | postgres | 7 pg_global | postgres | 8 us_west_2_tablespace | yugabyte | 9(5 rows)

Create Transaction Table and Partitions

Create

bank_transactiontable1CREATE TABLE bank_transactions ( 2 user_id INTEGER NOT NULL, 3 account_id INTEGER NOT NULL, 4 geo_partition VARCHAR, 5 account_type VARCHAR NOT NULL, 6 amount NUMERIC NOT NULL, 7 txn_type VARCHAR NOT NULL, 8 created_at TIMESTAMP DEFAULT NOW() 9) PARTITION BY LIST (geo_partition);Output

1CREATE TABLESetup table partition for

US1CREATE TABLE bank_transactions_us 2 PARTITION OF bank_transactions 3 (user_id, account_id, geo_partition, account_type, 4 amount, txn_type, created_at, 5 PRIMARY KEY (user_id HASH, account_id, geo_partition)) 6 FOR VALUES IN ('US') TABLESPACE us_west_2_tablespace;Output

1CREATE TABLESetup table partition for

EU1CREATE TABLE bank_transactions_eu 2 PARTITION OF bank_transactions 3 (user_id, account_id, geo_partition, account_type, 4 amount, txn_type, created_at, 5 PRIMARY KEY (user_id HASH, account_id, geo_partition)) 6 FOR VALUES IN ('EU') TABLESPACE eu_central_1_tablespace;Output

1CREATE TABLESetup table partition for

India1CREATE TABLE bank_transactions_india 2 PARTITION OF bank_transactions 3 (user_id, account_id, geo_partition, account_type, 4 amount, txn_type, created_at, 5 PRIMARY KEY (user_id HASH, account_id, geo_partition)) 6 FOR VALUES IN ('India') TABLESPACE ap_south_1_tablespace;Output

1CREATE TABLE(Optional) List all the relations (tables/partitions/etc.)

1\dOutput

1 List of relations 2 Schema | Name | Type | Owner 3--------+---------------------------+-------+---------- 4 public | bank_transactions | table | yugabyte 5 public | bank_transactions_eu | table | yugabyte 6 public | bank_transactions_india | table | yugabyte 7 public | bank_transactions_us | table | yugabyte 8(4 rows)(Optional) Get details of

bank_transactionstable1\d+ bank_transactionsOutput

1 Table "public.bank_transactions" 2 Column | Type | Collation | Nullable | Default | Storage | Stats target | Description 3---------------+-----------------------------+-----------+----------+---------+----------+--------------+------------- 4 user_id | integer | | not null | | plain | | 5 account_id | integer | | not null | | plain | | 6 geo_partition | character varying | | | | extended | | 7 account_type | character varying | | not null | | extended | | 8 amount | numeric | | not null | | main | | 9 txn_type | character varying | | not null | | extended | | 10 created_at | timestamp without time zone | | | now() | plain | | 11Partition key: LIST (geo_partition) 12Partitions: bank_transactions_eu FOR VALUES IN ('EU'), 13 bank_transactions_india FOR VALUES IN ('India'), 14 bank_transactions_us FOR VALUES IN ('US'),(Optional) On the Master UI, check the table configuration by clicking Home > Overview > (See all tables) or click here.

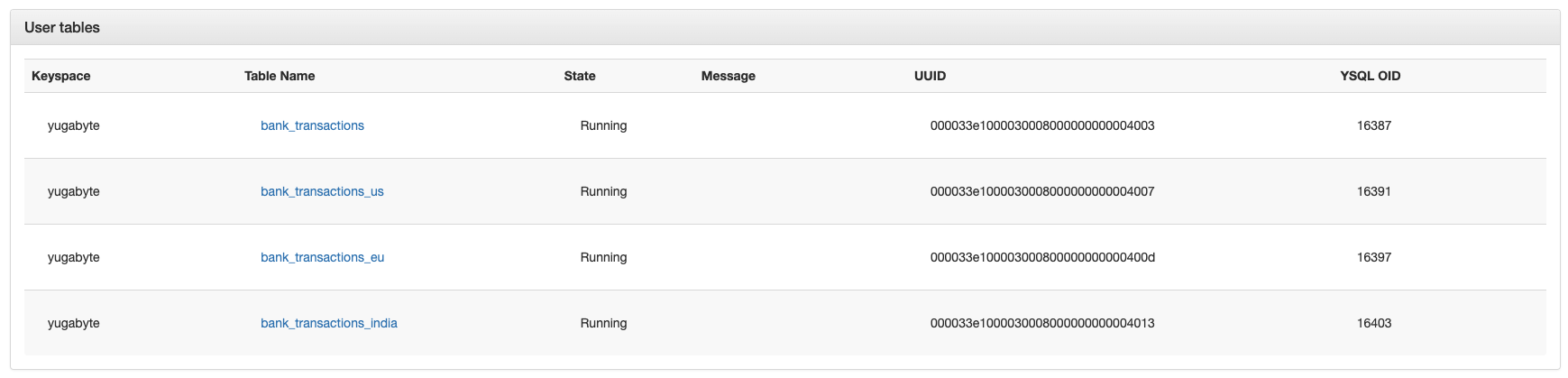

Screenshot

Click on

bank_transactionto see that table settings. Some of the things to note here are:Replication Config is same as the cluster level replication configuration.

1live_replicas { 2 num_replicas: 3 3 placement_blocks { 4 cloud_info { 5 placement_cloud: "aws" 6 placement_region: "eu-central-1" 7 placement_zone: "eu-central-1a" 8 } 9 min_num_replicas: 1 10 } 11 placement_blocks { 12 cloud_info { 13 placement_cloud: "aws" 14 placement_region: "ap-south-1" 15 placement_zone: "ap-south-1a" 16 } 17 min_num_replicas: 1 18 } 19 placement_blocks { 20 cloud_info { 21 placement_cloud: "aws" 22 placement_region: "us-west-2" 23 placement_zone: "us-west-2a" 24 } 25 min_num_replicas: 1 26 } 27}All the tablet groups are configured to be spread across multiple-regions (

us-west-2,eu-central-1andap-south-1) with IPs127.0.0.1,127.0.0.2and127.0.0.3.All the data going into this table by default will be spread across multiple-regions (

us-west-2,eu-central-1andap-south-1).

Back on the table list, click on

bank_transactions_euto see the partition configuration. Here, you will notice:Replication config is not same as the cluster level config. Instead its same as

replica_placementvalue in theeu_central_1_tablespacedefinition.1live_replicas { 2 num_replicas: 3 3 placement_blocks { 4 cloud_info { 5 placement_cloud: "aws" 6 placement_region: "eu-central-1" 7 placement_zone: "eu-central-1a" 8 } 9 min_num_replicas: 1 10 } 11 placement_blocks { 12 cloud_info { 13 placement_cloud: "aws" 14 placement_region: "eu-central-1" 15 placement_zone: "eu-central-1b" 16 } 17 min_num_replicas: 1 18 } 19 placement_blocks { 20 cloud_info { 21 placement_cloud: "aws" 22 placement_region: "eu-central-1" 23 placement_zone: "eu-central-1c" 24 } 25 min_num_replicas: 1 26 } 27}All the tablet groups are configured to be spread across nodes in

eu-central-1region (127.0.0.2,127.0.0.6and127.0.0.7).All the data going into this partition will be placed only in

eu-central-1regional nodes.

We have now finished setup for the table, partitions and tablespaces for all the regions now. Lets proceed with transaction simulations.

Scenario : Single Row Transaction

Launch YSQL Shell

1ysqlshCreate transaction record for

EU1INSERT INTO bank_transactions 2 VALUES (100, 10001, 'EU', 'checking', 120.50, 'debit');Output

1INSERT 0 1Examine

EUpartition1SELECT * FROM bank_transactions_eu;Output

1 user_id | account_id | geo_partition | account_type | amount | txn_type | created_at 2---------+------------+---------------+--------------+--------+----------+---------------------------- 3 100 | 10001 | EU | checking | 120.5 | debit | 2022-03-17 20:36:05.603271 4(1 row)And lets check other

Indiageo partitions also1SELECT COUNT(*) FROM bank_transactions_india;Output

1 count 2------- 3 0 4(1 row)Also check the

USpartition1SELECT COUNT(*) FROM bank_transactions_us;Output

1 count 2------- 3 0 4(1 row)

Scenario: Multiple Single Row Transactions

Lets create a multi-geo transactions. This is what you would in in a connection pool / connection reuse scenario of you application.

Copy/Paste one row at a time, otherwise output is mangled

1INSERT INTO bank_transactions 2 VALUES (200, 20001, 'India', 'savings', 2000, 'credit'); 3INSERT INTO bank_transactions 4 VALUES (300, 30001, 'US', 'checking', 105.25, 'debit');Output

1INSERT 0 1 2INSERT 0 1Lets look at the transaction in

Indiapartition1SELECT * FROM bank_transactions_india;Output

1 user_id | account_id | geo_partition | account_type | amount | txn_type | created_at 2---------+------------+---------------+--------------+--------+----------+---------------------------- 3 200 | 20001 | India | savings | 2000 | credit | 2022-03-17 22:37:10.318521 4(1 row)Lets look at the transaction in

USpartition1SELECT * FROM bank_transactions_us;Output

1 user_id | account_id | geo_partition | account_type | amount | txn_type | created_at 2---------+------------+---------------+--------------+--------+----------+---------------------------- 3 300 | 30001 | US | checking | 105.25 | debit | 2022-03-17 22:37:11.49277 4(1 row)Lets look at the whole table with the data locations

1SELECT tableoid::regclass, * FROM bank_transactions;Output

1 tableoid | user_id | account_id | geo_partition | account_type | amount | txn_type | created_at 2-------------------------+---------+------------+---------------+--------------+--------+----------+---------------------------- 3 bank_transactions_eu | 100 | 10001 | EU | checking | 120.5 | debit | 2022-03-17 20:36:05.603271 4 bank_transactions_india | 200 | 20001 | India | savings | 2000 | credit | 2022-03-17 22:37:10.318521 5 bank_transactions_us | 300 | 30001 | US | checking | 105.25 | debit | 2022-03-17 22:37:11.492779 6(3 rows)tableoid::regclassis a special expression in PostgresSQL for listing the location / partition of a row. Things to notice here are:- We can access all the transactions via table

bank_transactions. - We ensured that data for a geo (Example:

EU) is in the partition of that geoEUpartition. And,EUpartition is setup such that it will be created onEUtablespace.EUtablespace is configured to have its data place ineu-central-1region, acrosseu-central-1a,eu-central-1bandeu-central-1czone.

- We can access all the transactions via table

Scenario : Multi Row Transactions

Let try to multi-row transaction. Remember we are connected to

127.0.0.1node, which is inus-west-2a.Copy/Paste one row at a time, otherwise output is mangled

1BEGIN; 2INSERT INTO bank_transactions VALUES (100, 10002, 'EU', 'checking', 400.00, 'debit'); 3INSERT INTO bank_transactions VALUES (100, 10003, 'EU', 'checking', 400.00, 'credit'); 4COMMIT;Output

1ERROR: Illegal state: Nonlocal tablet accessed in local transaction: tablet <hex-number>: . Errors from tablet servers: [Illegal state (yb/client/transaction.cc:288): Nonlocal tablet accessed in local transaction: tablet <hex-number>]This fails, as we do not allows cross geo-transaction for multi-row transaction - by default. But we have inserted data for

EU,IndiaandSingaporethroughus-west-2anode, then why that worked? Well, this is because of optimization in YugabyteDB for single row transactions. As per the docs:1The transaction manager of YugabyteDB automatically detects transactions that 2update a single row (as opposed to transactions that update rows across tablets 3or nodes). In order to achieve high performance, the updates to a single row 4directly update the row without having to interact with the transaction status 5tablet using the single row transaction path, also called the fast path.Connect to a node in

eu-central-1.1\c - - 127.0.0.2Output

1You are now connected to database "yugabyte" as user "yugabyte" on host "127.0.0.2" at port "5433".Note: You could quit shell and connect to node via argument. But

\cis doing the same thing really.Let try again

Copy/Paste one row at a time, otherwise output is mangled

1BEGIN; 2INSERT INTO bank_transactions VALUES (100, 10002, 'EU', 'checking', 400.00, 'debit'); 3INSERT INTO bank_transactions VALUES (100, 10003, 'EU', 'checking', 400.00, 'credit'); 4COMMIT;Output

1BEGIN 2INSERT 0 1 3INSERT 0 1 4COMMITThat worked.

Lets check that transactions:

1select tableoid::regclass, * from bank_transactions where account_id in ('10002','10003');Output

1 tableoid | user_id | account_id | geo_partition | account_type | amount | txn_type | created_at 2----------------------+---------+------------+---------------+--------------+--------+----------+---------------------------- 3 bank_transactions_eu | 100 | 10002 | EU | checking | 400 | debit | 2022-03-17 22:43:37.235321 4 bank_transactions_eu | 100 | 10003 | EU | checking | 400 | credit | 2022-03-17 22:43:37.235321 5(2 rows)

Scenario : Multi Row - Multi region transactions

Sometimes you may need to run queries for cross region data spanning across multiple rows. From previous scenario we saw that we have to connect to an EU node for working with multiple rows in EU. Then, what about transactions spanning across geo?

Lets try to do a cross geo-transaction (US x EU) from a third geo (India).

Let connect to an

Indianode1\c - - 127.0.0.3Output

1You are now connected to database "yugabyte" as user "yugabyte" on host "127.0.0.3" at port "5433".Set

force_global_transactiontoTRUE1SET force_global_transaction = TRUE;Output

1SETLets run the cross geo transaction as follows

Copy/Paste one row at a time, otherwise output is mangled

1 BEGIN; 2 INSERT INTO bank_transactions VALUES (100, 10004, 'US', 'checking', 400.00, 'debit'); 3 INSERT INTO bank_transactions VALUES (200, 10005, 'EU', 'checking', 400.00, 'credit'); 4 COMMIT;Output

1BEGIN 2INSERT 0 1 3INSERT 0 1 4COMMITCheck the transactions

1select tableoid::regclass, * from bank_transactions where account_id in ('10004','10005');Output

1 tableoid | user_id | account_id | geo_partition | account_type | amount | txn_type | created_at 2----------------------+---------+------------+---------------+--------------+--------+----------+---------------------------- 3 bank_transactions_eu | 200 | 10005 | EU | checking | 400 | credit | 2022-03-17 22:52:33.346504 4 bank_transactions_us | 100 | 10004 | US | checking | 400 | debit | 2022-03-17 22:52:33.346504 5(2 rows)

Scenario : Add new region

Quit YSQL shell

1quit;Add tablet server for a new region - South America(sa-east-1)

1yb-ctl --data_dir $PWD/data add_node --placement_info "aws.sa-east-1.sa-east-1a" 2yb-ctl --data_dir $PWD/data add_node --placement_info "aws.sa-east-1.sa-east-1b" 3yb-ctl --data_dir $PWD/data add_node --placement_info "aws.sa-east-1.sa-east-1c"Output

1Adding node. 2Waiting for cluster to be ready. 3---------------------------------------------------------------------------------------------------- 4| Node 10: yb-tserver (pid 96765) | 5---------------------------------------------------------------------------------------------------- 6| JDBC : jdbc:postgresql://127.0.0.10:5433/yugabyte | 7| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.10 | 8| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.10 | 9| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.10 | 10| data-dir[0] : /tmp/demo/data/node-10/disk-1/yb-data | 11| yb-tserver Logs : /tmp/demo/data/node-10/disk-1/yb-data/tserver/logs | 12---------------------------------------------------------------------------------------------------- 13Adding node. 14Waiting for cluster to be ready. 15---------------------------------------------------------------------------------------------------- 16| Node 11: yb-tserver (pid 96826) | 17---------------------------------------------------------------------------------------------------- 18| JDBC : jdbc:postgresql://127.0.0.11:5433/yugabyte | 19| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.11 | 20| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.11 | 21| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.11 | 22| data-dir[0] : /tmp/demo/data/node-11/disk-1/yb-data | 23| yb-tserver Logs : /tmp/demo/data/node-11/disk-1/yb-data/tserver/logs | 24---------------------------------------------------------------------------------------------------- 25Adding node. 26Waiting for cluster to be ready. 27---------------------------------------------------------------------------------------------------- 28| Node 12: yb-tserver (pid 96895) | 29---------------------------------------------------------------------------------------------------- 30| JDBC : jdbc:postgresql://127.0.0.12:5433/yugabyte | 31| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.12 | 32| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.12 | 33| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.12 | 34| data-dir[0] : /tmp/demo/data/node-12/disk-1/yb-data | 35| yb-tserver Logs : /tmp/demo/data/node-12/disk-1/yb-data/tserver/logs | 36----------------------------------------------------------------------------------------------------Check cluster status

1yb-ctl status --data_dir $PWD/dataOutput

1---------------------------------------------------------------------------------------------------- 2| Node Count: 12 | Replication Factor: 3 | 3---------------------------------------------------------------------------------------------------- 4| JDBC : jdbc:postgresql://127.0.0.1:5433/yugabyte | 5| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh | 6| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh | 7| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli | 8| Web UI : http://127.0.0.1:7000/ | 9| Cluster Data : /tmp/demo/data | 10---------------------------------------------------------------------------------------------------- 11---------------------------------------------------------------------------------------------------- 12| Node 1: yb-tserver (pid 90648), yb-master (pid 90639) | 13---------------------------------------------------------------------------------------------------- 14| JDBC : jdbc:postgresql://127.0.0.1:5433/yugabyte | 15| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh | 16| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh | 17| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli | 18| data-dir[0] : /tmp/demo/data/node-1/disk-1/yb-data | 19| yb-tserver Logs : /tmp/demo/data/node-1/disk-1/yb-data/tserver/logs | 20| yb-master Logs : /tmp/demo/data/node-1/disk-1/yb-data/master/logs | 21---------------------------------------------------------------------------------------------------- 22---------------------------------------------------------------------------------------------------- 23| Node 2: yb-tserver (pid 90651), yb-master (pid 90642) | 24---------------------------------------------------------------------------------------------------- 25| JDBC : jdbc:postgresql://127.0.0.2:5433/yugabyte | 26| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.2 | 27| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.2 | 28| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.2 | 29| data-dir[0] : /tmp/demo/data/node-2/disk-1/yb-data | 30| yb-tserver Logs : /tmp/demo/data/node-2/disk-1/yb-data/tserver/logs | 31| yb-master Logs : /tmp/demo/data/node-2/disk-1/yb-data/master/logs | 32---------------------------------------------------------------------------------------------------- 33---------------------------------------------------------------------------------------------------- 34| Node 3: yb-tserver (pid 90654), yb-master (pid 90645) | 35---------------------------------------------------------------------------------------------------- 36| JDBC : jdbc:postgresql://127.0.0.3:5433/yugabyte | 37| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.3 | 38| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.3 | 39| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.3 | 40| data-dir[0] : /tmp/demo/data/node-3/disk-1/yb-data | 41| yb-tserver Logs : /tmp/demo/data/node-3/disk-1/yb-data/tserver/logs | 42| yb-master Logs : /tmp/demo/data/node-3/disk-1/yb-data/master/logs | 43---------------------------------------------------------------------------------------------------- 44---------------------------------------------------------------------------------------------------- 45| Node 4: yb-tserver (pid 91265) | 46---------------------------------------------------------------------------------------------------- 47| JDBC : jdbc:postgresql://127.0.0.4:5433/yugabyte | 48| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.4 | 49| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.4 | 50| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.4 | 51| data-dir[0] : /tmp/demo/data/node-4/disk-1/yb-data | 52| yb-tserver Logs : /tmp/demo/data/node-4/disk-1/yb-data/tserver/logs | 53---------------------------------------------------------------------------------------------------- 54---------------------------------------------------------------------------------------------------- 55| Node 5: yb-tserver (pid 91323) | 56---------------------------------------------------------------------------------------------------- 57| JDBC : jdbc:postgresql://127.0.0.5:5433/yugabyte | 58| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.5 | 59| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.5 | 60| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.5 | 61| data-dir[0] : /tmp/demo/data/node-5/disk-1/yb-data | 62| yb-tserver Logs : /tmp/demo/data/node-5/disk-1/yb-data/tserver/logs | 63---------------------------------------------------------------------------------------------------- 64---------------------------------------------------------------------------------------------------- 65| Node 6: yb-tserver (pid 91385) | 66---------------------------------------------------------------------------------------------------- 67| JDBC : jdbc:postgresql://127.0.0.6:5433/yugabyte | 68| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.6 | 69| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.6 | 70| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.6 | 71| data-dir[0] : /tmp/demo/data/node-6/disk-1/yb-data | 72| yb-tserver Logs : /tmp/demo/data/node-6/disk-1/yb-data/tserver/logs | 73---------------------------------------------------------------------------------------------------- 74---------------------------------------------------------------------------------------------------- 75| Node 7: yb-tserver (pid 91449) | 76---------------------------------------------------------------------------------------------------- 77| JDBC : jdbc:postgresql://127.0.0.7:5433/yugabyte | 78| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.7 | 79| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.7 | 80| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.7 | 81| data-dir[0] : /tmp/demo/data/node-7/disk-1/yb-data | 82| yb-tserver Logs : /tmp/demo/data/node-7/disk-1/yb-data/tserver/logs | 83---------------------------------------------------------------------------------------------------- 84---------------------------------------------------------------------------------------------------- 85| Node 8: yb-tserver (pid 91495) | 86---------------------------------------------------------------------------------------------------- 87| JDBC : jdbc:postgresql://127.0.0.8:5433/yugabyte | 88| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.8 | 89| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.8 | 90| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.8 | 91| data-dir[0] : /tmp/demo/data/node-8/disk-1/yb-data | 92| yb-tserver Logs : /tmp/demo/data/node-8/disk-1/yb-data/tserver/logs | 93---------------------------------------------------------------------------------------------------- 94---------------------------------------------------------------------------------------------------- 95| Node 9: yb-tserver (pid 91549) | 96---------------------------------------------------------------------------------------------------- 97| JDBC : jdbc:postgresql://127.0.0.9:5433/yugabyte | 98| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.9 | 99| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.9 | 100| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.9 | 101| data-dir[0] : /tmp/demo/data/node-9/disk-1/yb-data | 102| yb-tserver Logs : /tmp/demo/data/node-9/disk-1/yb-data/tserver/logs | 103---------------------------------------------------------------------------------------------------- 104---------------------------------------------------------------------------------------------------- 105| Node 10: yb-tserver (pid 96765) | 106---------------------------------------------------------------------------------------------------- 107| JDBC : jdbc:postgresql://127.0.0.10:5433/yugabyte | 108| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.10 | 109| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.10 | 110| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.10 | 111| data-dir[0] : /tmp/demo/data/node-10/disk-1/yb-data | 112| yb-tserver Logs : /tmp/demo/data/node-10/disk-1/yb-data/tserver/logs | 113---------------------------------------------------------------------------------------------------- 114---------------------------------------------------------------------------------------------------- 115| Node 11: yb-tserver (pid 96826) | 116---------------------------------------------------------------------------------------------------- 117| JDBC : jdbc:postgresql://127.0.0.11:5433/yugabyte | 118| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.11 | 119| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.11 | 120| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.11 | 121| data-dir[0] : /tmp/demo/data/node-11/disk-1/yb-data | 122| yb-tserver Logs : /tmp/demo/data/node-11/disk-1/yb-data/tserver/logs | 123---------------------------------------------------------------------------------------------------- 124---------------------------------------------------------------------------------------------------- 125| Node 12: yb-tserver (pid 96895) | 126---------------------------------------------------------------------------------------------------- 127| JDBC : jdbc:postgresql://127.0.0.12:5433/yugabyte | 128| YSQL Shell : yugabyte-2.13.0.0/bin/ysqlsh -h 127.0.0.12 | 129| YCQL Shell : yugabyte-2.13.0.0/bin/ycqlsh 127.0.0.12 | 130| YEDIS Shell : yugabyte-2.13.0.0/bin/redis-cli -h 127.0.0.12 | 131| data-dir[0] : /tmp/demo/data/node-12/disk-1/yb-data | 132| yb-tserver Logs : /tmp/demo/data/node-12/disk-1/yb-data/tserver/logs | 133----------------------------------------------------------------------------------------------------Launch SQL Shell

1ysqlshOutput

1ysqlsh (11.2-YB-2.13.0.0-b0) 2Type "help" for help. 3 4yugabyte=#Create a tablespace that spans across new region/zones

1 2CREATE TABLESPACE sa_east_1_tablespace WITH ( 3 replica_placement='{"num_replicas": 3, "placement_blocks": 4 [{"cloud":"aws","region":"sa-east-1","zone":"sa-east-1a","min_num_replicas":1}, 5 {"cloud":"aws","region":"sa-east-1","zone":"sa-east-1b","min_num_replicas":1}, 6 {"cloud":"aws","region":"sa-east-1","zone":"sa-east-1c","min_num_replicas":1}]}' 7 );Output

1CREATE TABLESPACE(Optional) List all the tablespaces

1\db\Output

1 List of tablespaces 2 Name | Owner | Location 3-------------------------+----------+---------- 4 ap_south_1_tablespace | yugabyte | 5 eu_central_1_tablespace | yugabyte | 6 pg_default | postgres | 7 pg_global | postgres | 8 sa_east_1_tablespace | yugabyte | 9 us_west_2_tablespace | yugabyte | 10(6 rows)Create partition on

bank_transactionstable that uses new tablespacesa_east_1_tablespace1CREATE TABLE bank_transactions_brazil 2 PARTITION OF bank_transactions 3 (user_id, account_id, geo_partition, account_type, 4 amount, txn_type, created_at, 5 PRIMARY KEY (user_id HASH, account_id, geo_partition)) 6 FOR VALUES IN ('Brazil') TABLESPACE sa_east_1_tablespace;Output

1CREATE TABLE(Optional) List all the relations (tables/partitions/etc.)

1\dOutput

1 List of relations 2 Schema | Name | Type | Owner 3--------+--------------------------+-------+---------- 4 public | bank_transactions | table | yugabyte 5 public | bank_transactions_brazil | table | yugabyte 6 public | bank_transactions_eu | table | yugabyte 7 public | bank_transactions_india | table | yugabyte 8 public | bank_transactions_us | table | yugabyte 9(5 rows)(Optional) Get details of

bank_transactionstable1\d+ bank_transactionsOutput

1 Table "public.bank_transactions" 2 Column | Type | Collation | Nullable | Default | Storage | Stats target | Description 3---------------+-----------------------------+-----------+----------+---------+----------+--------------+------------- 4 user_id | integer | | not null | | plain | | 5 account_id | integer | | not null | | plain | | 6 geo_partition | character varying | | | | extended | | 7 account_type | character varying | | not null | | extended | | 8 amount | numeric | | not null | | main | | 9 txn_type | character varying | | not null | | extended | | 10 created_at | timestamp without time zone | | | now() | plain | | 11Partition key: LIST (geo_partition) 12Partitions: bank_transactions_brazil FOR VALUES IN ('Brazil'), 13 bank_transactions_eu FOR VALUES IN ('EU'), 14 bank_transactions_india FOR VALUES IN ('India'), 15 bank_transactions_us FOR VALUES IN ('US')Insert transactions

1INSERT INTO bank_transactions 2 VALUES (400, 40001, 'Brazil', 'savings', 1000, 'credit');Check record transactions

1select tableoid::regclass, * from bank_transactions;Output

1 tableoid | user_id | account_id | geo_partition | account_type | amount | txn_type | created_at 2--------------------------+---------+------------+---------------+--------------+--------+----------+---------------------------- 3 bank_transactions_brazil | 400 | 40001 | Brazil | savings | 1000 | credit | 2022-03-17 23:18:48.225731 4 bank_transactions_eu | 200 | 10005 | EU | checking | 400 | credit | 2022-03-17 22:52:33.346504 5 bank_transactions_eu | 100 | 10001 | EU | checking | 120.5 | debit | 2022-03-17 20:36:05.603271 6 bank_transactions_eu | 100 | 10002 | EU | checking | 400 | debit | 2022-03-17 22:43:37.235321 7 bank_transactions_eu | 100 | 10003 | EU | checking | 400 | credit | 2022-03-17 22:43:37.235321 8 bank_transactions_india | 200 | 20001 | India | savings | 2000 | credit | 2022-03-17 22:37:10.318521 9 bank_transactions_us | 100 | 10004 | US | checking | 400 | debit | 2022-03-17 22:52:33.346504 10 bank_transactions_us | 300 | 30001 | US | checking | 105.25 | debit | 2022-03-17 22:37:11.492779 11(8 rows)

Scenario : Transaction for Unknown Geo

Lets try to insert a record for a

Singaporeand see what happens1 INSERT INTO bank_transactions 2 VALUES (500, 50001, 'Singapore', 'savings', 2000, 'credit');Output

1ERROR: no partition of relation "bank_transactions" found for row 2DETAIL: Partition key of the failing row contains (geo_partition) = (Singapore).This is because there is no

defaultpartition on the table.Lets create a default partition on

bank_transactions1CREATE TABLE bank_transactions_default 2 PARTITION OF bank_transactions 3 (user_id, account_id, geo_partition, account_type, 4 amount, txn_type, created_at, 5 PRIMARY KEY (user_id HASH, account_id, geo_partition)) 6 DEFAULT;Output

1CREATE TABLE(Optional) List all the relations (tables/partitions/etc.)

1\dOutput

1 List of relations 2 Schema | Name | Type | Owner 3--------+---------------------------+-------+---------- 4 public | bank_transactions | table | yugabyte 5 public | bank_transactions_brazil | table | yugabyte 6 public | bank_transactions_default | table | yugabyte 7 public | bank_transactions_eu | table | yugabyte 8 public | bank_transactions_india | table | yugabyte 9 public | bank_transactions_us | table | yugabyte 10(6 rows)(Optional) Get details of

bank_transactionstable1\d+ bank_transactionsOutput

1 Table "public.bank_transactions" 2 Column | Type | Collation | Nullable | Default | Storage | Stats target | Description 3---------------+-----------------------------+-----------+----------+---------+----------+--------------+------------- 4 user_id | integer | | not null | | plain | | 5 account_id | integer | | not null | | plain | | 6 geo_partition | character varying | | | | extended | | 7 account_type | character varying | | not null | | extended | | 8 amount | numeric | | not null | | main | | 9 txn_type | character varying | | not null | | extended | | 10 created_at | timestamp without time zone | | | now() | plain | | 11Partition key: LIST (geo_partition) 12Partitions: bank_transactions_brazil FOR VALUES IN ('Brazil'), 13 bank_transactions_eu FOR VALUES IN ('EU'), 14 bank_transactions_india FOR VALUES IN ('India'), 15 bank_transactions_us FOR VALUES IN ('US'), 16 bank_transactions_default DEFAULTAs you can see we have a new partition for

DEFAULTNow, lets try to insert a

Singaporetransaction again and see what happens.1 INSERT INTO bank_transactions 2 VALUES (500, 50001, 'Singapore', 'savings', 2000, 'credit');Output

1INSERT 0 1Lets look at the whole table with the data locations

1SELECT tableoid::regclass, * FROM bank_transactions;Output

1 tableoid | user_id | account_id | geo_partition | account_type | amount | txn_type | created_at 2---------------------------+---------+------------+---------------+--------------+--------+----------+---------------------------- 3 bank_transactions_brazil | 400 | 40001 | Brazil | savings | 1000 | credit | 2022-03-17 23:18:48.225731 4 bank_transactions_eu | 200 | 10005 | EU | checking | 400 | credit | 2022-03-17 22:52:33.346504 5 bank_transactions_eu | 100 | 10001 | EU | checking | 120.5 | debit | 2022-03-17 20:36:05.603271 6 bank_transactions_eu | 100 | 10002 | EU | checking | 400 | debit | 2022-03-17 22:43:37.235321 7 bank_transactions_eu | 100 | 10003 | EU | checking | 400 | credit | 2022-03-17 22:43:37.235321 8 bank_transactions_india | 200 | 20001 | India | savings | 2000 | credit | 2022-03-17 22:37:10.318521 9 bank_transactions_us | 100 | 10004 | US | checking | 400 | debit | 2022-03-17 22:52:33.346504 10 bank_transactions_us | 300 | 30001 | US | checking | 105.25 | debit | 2022-03-17 22:37:11.492779 11 bank_transactions_default | 500 | 50001 | Singapore | savings | 2000 | credit | 2022-03-17 23:26:28.548771 12(9 rows)So, record for

Singaporeis placed on the default partition.(Optional) Open Master UI / Table List, click

bank_transactions_default. In the "Replication Info" you see following json:1live_replicas { 2 num_replicas: 3 3 placement_blocks { 4 cloud_info { 5 placement_cloud: "aws" 6 placement_region: "eu-central-1" 7 placement_zone: "eu-central-1a" 8 } 9 min_num_replicas: 1 10 } 11 placement_blocks { 12 cloud_info { 13 placement_cloud: "aws" 14 placement_region: "ap-south-1" 15 placement_zone: "ap-south-1a" 16 } 17 min_num_replicas: 1 18 } 19 placement_blocks { 20 cloud_info { 21 placement_cloud: "aws" 22 placement_region: "us-west-2" 23 placement_zone: "us-west-2a" 24 } 25 min_num_replicas: 1 26 } 27}This is same as the cluster level replication config, since we did not create any tablespace for the

bank_transactions_defaultpartition.

Cleanup

Quit YSQL Shell

1\qDestroy cluster

1yb-ctl destroy --data_dir $PWD/dataOutput

1Destroying cluster.

Want to Learn More?

- Join DSS Asia on March 30-31. Register today at asia.distributedsql.org

- Join YugabyteDB - Community Slack alt

- Watch YFTT - YugabyteDB Friday Tech Talk

- Get a free training and certification at Yugabyte University

- Sign Up for Free Yugabyte Cloud account

Original Source: YB Docs for Row Level Geo Partitioning